I’ve spent the last couple of weeks trying to create a Nuke CopyCat model that can assist with colour matching two images, where a child shot is matched to a hero shot.

Matching a reference image is a key compositing task that requires a fair amount of practice to train one’s eye to a professional standard, so it seemed a valuable thing to research in between shows. NukeX has its MatchGrade node, but it isn’t accurate at creating CDLs, and it is unable to match frames from the same sequence whose content differs.

Reversible 2D LUTs (CDLs) are required in VFX workflows because every sequence with CG needs all of its plates graded so they consistently match one hero shot. This allows a single lighting setup to be used for the whole sequence, rather than each shot being lit individually to its corresponding plate. This improves technical consistency and efficiency, leaving more time to work on creative detail.
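As background, a CDL’s slope/offset/power (SOP) stage applies the same simple formula to each channel, out = (in × slope + offset)^power. That is why it can be inverted. The Python sketch below shows the forward and inverse maths for the nine SOP values. It is illustrative only: it leaves saturation at 1.0 and only clamps negative values at zero, rather than applying the full clamping that some CDL implementations use. The function names are hypothetical.

```python
def cdl_forward(value, slope, offset, power):
    """out = (in * slope + offset) ** power for a single channel value."""
    graded = value * slope + offset
    # Negative values cannot take a fractional power, so clamp them at zero.
    # Any value clamped here can no longer be recovered by the inverse.
    return max(graded, 0.0) ** power


def cdl_inverse(value, slope, offset, power):
    """in = (out ** (1 / power) - offset) / slope; assumes slope != 0."""
    return (max(value, 0.0) ** (1.0 / power) - offset) / slope


def apply_cdl(rgb, slopes, offsets, powers, inverse=False):
    """Apply (or undo) per-channel SOP values on an (r, g, b) tuple."""
    fn = cdl_inverse if inverse else cdl_forward
    return tuple(fn(v, s, o, p) for v, s, o, p in zip(rgb, slopes, offsets, powers))


# Usage: grade a pixel, then invert the grade to get the original back.
pixel = (0.18, 0.20, 0.25)
slopes, offsets, powers = (1.2, 1.0, 0.9), (0.01, 0.0, -0.01), (1.0, 1.05, 0.95)
graded = apply_cdl(pixel, slopes, offsets, powers)
restored = apply_cdl(graded, slopes, offsets, powers, inverse=True)
```

The round trip only holds while no value is clamped and every slope is non-zero. This is the practical sense in which a CDL is reversible.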

I asked on ACESCentral if anyone else knew how to do this and was pointed to an amazing product called http://colourlab.ai that works in Resolve. After testing it, I highly recommend it, and concluded that it could speed up the process more than tenfold. But… at the time it had no CDL export or ACEScg working space, so it was not yet useful in the VFX industry for the neutral grade process.

I did an initial test to see if CopyCat could predict only a multiply between two similar images with different exposures. It could do so to a high degree of accuracy, with results that were visually identical. This was already better than the MatchGrade node at producing a CDL / 2D LUT.

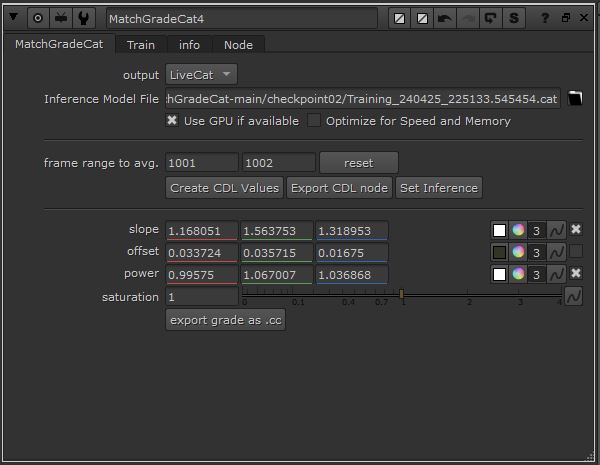

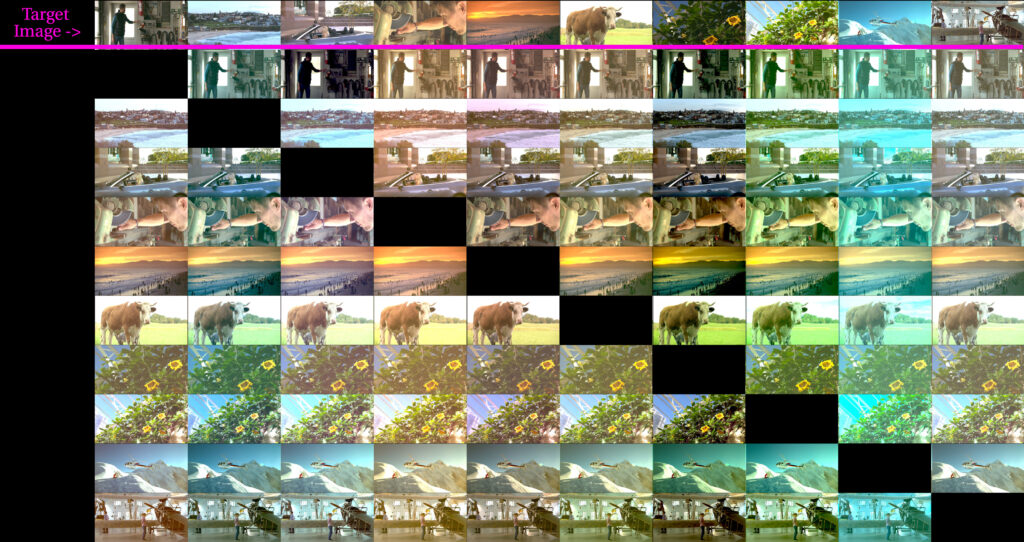
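For pixel-aligned images like this exposure test, a multiply-only match also has a simple closed-form baseline that can be used to sanity-check a model’s predicted slope. Per channel, the least-squares gain is k = Σ(s·t) / Σ(s²). This is a standard technique, not how CopyCat or the MatchGradeCat model works. It breaks down as soon as the source and target frames show different content, which is the case CopyCat is meant to handle. The function name `fit_slope` is hypothetical.

```python
def fit_slope(source, target):
    """Least-squares per-channel gain mapping source pixels onto target pixels.

    source, target: equal-length lists of (r, g, b) tuples for the SAME pixel
    positions. For each channel, k = sum(s * t) / sum(s * s) minimises
    sum((k * s - t) ** 2).
    """
    slopes = []
    for c in range(3):
        num = sum(s[c] * t[c] for s, t in zip(source, target))
        den = sum(s[c] * s[c] for s in source)
        # Fall back to an identity slope for an all-black channel.
        slopes.append(num / den if den else 1.0)
    return tuple(slopes)


# Usage: the target is the source pushed up one stop (x2) in every channel.
source = [(0.1, 0.2, 0.3), (0.4, 0.5, 0.6), (0.05, 0.15, 0.25)]
target = [tuple(2.0 * v for v in px) for px in source]
estimated = fit_slope(source, target)  # close to (2.0, 2.0, 2.0)
```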

The image below is of a full multiply/add/gamma “checkpoint 2” model. It shows that even if the frames are not identical, the matching process still works. However, with this “slope|offset|power” model there are sometimes inconsistencies (see the top-right skin tone). Further training specific to the target and source images you are using improves this (see below).

Instructions

Download the latest Nuke ‘matchGradeCat.nk’ and model ‘.cat’ file from GitHub.

Basic setup:

- Load the inference file, and connect the hero image to the ‘target’ input and the child image to the ‘source’ input. These images need to be in Nuke’s default ACEScg colourspace.

- Set the frame range used to calculate the CDL to at least two frames.

- If ‘output’ is set to ‘LiveCat’, you should see the matched result.

- You can toggle the slope|offset|power on and off to see their contribution

- Click CreateCDL Values

- Either set ‘output’ to ‘CDL’ or click ‘Export CDL values’ to see the image through a CDL node.
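The node’s internals aren’t described here. One plausible way to turn predictions from several frames into a single exportable CDL is to average the nine values across frames and write them out in the ASC CDL `.cc` (ColorCorrection) XML layout. The sketch below assumes simple averaging, which may not be what MatchGradeCat actually does. The function names are hypothetical. The XML omits the namespace and any metadata, so check it against what your pipeline’s CDL reader expects.

```python
import xml.etree.ElementTree as ET


def average_cdl(per_frame):
    """Average per-frame predictions.

    per_frame: list of 9-float lists ordered [sR, sG, sB, oR, oG, oB, pR, pG, pB].
    """
    n = len(per_frame)
    return [sum(frame[i] for frame in per_frame) / n for i in range(9)]


def cdl_to_cc_xml(values, cc_id):
    """Serialise nine SOP values as a minimal .cc-style ColorCorrection element."""

    def fmt(vals):
        return " ".join(f"{v:.6f}" for v in vals)

    cc = ET.Element("ColorCorrection", id=cc_id)
    sop = ET.SubElement(cc, "SOPNode")
    ET.SubElement(sop, "Slope").text = fmt(values[0:3])
    ET.SubElement(sop, "Offset").text = fmt(values[3:6])
    ET.SubElement(sop, "Power").text = fmt(values[6:9])
    # Saturation is not among the nine predicted values, so leave it neutral.
    sat = ET.SubElement(cc, "SatNode")
    ET.SubElement(sat, "Saturation").text = "1.000000"
    return ET.tostring(cc, encoding="unicode")


# Usage: two frames' predictions for the same child shot.
frames = [
    [1.1, 1.0, 0.9, 0.0, 0.01, 0.0, 1.0, 1.0, 1.0],
    [1.3, 1.0, 0.9, 0.02, 0.01, 0.0, 1.0, 1.0, 1.0],
]
xml_text = cdl_to_cc_xml(average_cdl(frames), "child_shot")
```

Averaging is the simplest choice. A median would be more robust if one frame in the range produces an outlier prediction.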

Further training:

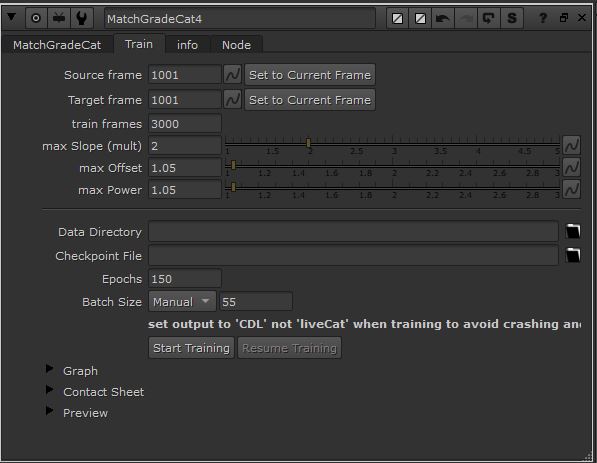

As this model was trained on a limited set of images, the result can be further improved by training a new model on the images you want to use it on. Here is an example of how you can improve the result with your own images in just 10 minutes of training:

- Select two frames for your source and target. (Note that these do not act as source/target during training; they are simply two different images from your sequence, e.g. a wide shot and a close-up.)

- Select the maximum required range of CDL slope/offset/power values. The smaller this range, the shorter you can train the model.

- Create a data directory.

- Download an inference model checkpoint file from the link above and add its path to ‘Checkpoint File’.

- Click Start Training (you will need a reasonably powerful GPU).

- On the main tab, add the .cat file you have just created to the ‘Inference Model File’ path.
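The maximum-range setting matters because it bounds the grades the model has to learn. The post doesn’t specify how training grades are distributed. As an illustration, assume they are drawn uniformly around the identity CDL; a sampler with user-set ranges might then look like the Python sketch below. The parameter names and default values are hypothetical. Narrower ranges leave a smaller space of grades to cover, which fits with needing less training time.

```python
import random


def sample_cdl(rng, slope_range=0.5, offset_range=0.05, power_range=0.2):
    """Draw nine SOP values centred on the identity grade (slope 1, offset 0, power 1).

    The *_range arguments stand in for the node's maximum slope/offset/power
    range; every value is drawn uniformly within +/- that range.
    """
    slopes = [1.0 + rng.uniform(-slope_range, slope_range) for _ in range(3)]
    offsets = [rng.uniform(-offset_range, offset_range) for _ in range(3)]
    powers = [1.0 + rng.uniform(-power_range, power_range) for _ in range(3)]
    return slopes + offsets + powers


# Usage: a seeded generator makes the training grades reproducible.
rng = random.Random(42)
narrow = [sample_cdl(rng, slope_range=0.1, offset_range=0.01, power_range=0.05)
          for _ in range(100)]
```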

Model Training / Methodology

CopyCat is designed to generate images rather than data, so this project was a bit of a challenge. Look inside the MatchGradeCat node if you want to see how I set up the training. Generally:

- Using freely available ARRI camera test footage of the most common cameras in the industry, I generated 1200×1200 AP0 images built from random 32×32 crops.

- I then used this data to train a model to predict the nine float values of a CDL node (slope, offset and power for each of the R, G and B channels).

- CopyCat’s Inference node compares the images to be matched and creates the CDL values. As my training dataset was limited (particularly for on-set/chroma-screen images), you might have to use my model as the initial weights and train the cat on a selection of images from your sequence.
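Here is a rough plain-Python illustration of the data-generation idea: random crops are graded by a known random CDL, and its nine values become the label. It is not the author’s Nuke node graph. The post doesn’t describe how MatchGradeCat actually packs crops and CDL values into CopyCat’s input and ground-truth images. The image representation (nested lists of RGB tuples), the function names and the sampling ranges are therefore all assumptions.

```python
import random


def random_crop(image, size, rng):
    """image: list of rows of (r, g, b) tuples. Returns a size x size crop."""
    h, w = len(image), len(image[0])
    y = rng.randrange(h - size + 1)
    x = rng.randrange(w - size + 1)
    return [row[x:x + size] for row in image[y:y + size]]


def grade(crop, cdl):
    """Apply nine SOP values [sR, sG, sB, oR, oG, oB, pR, pG, pB] to every pixel."""
    s, o, p = cdl[0:3], cdl[3:6], cdl[6:9]
    # Negatives are clamped at zero so the power stays defined.
    return [[tuple(max(px[c] * s[c] + o[c], 0.0) ** p[c] for c in range(3))
             for px in row] for row in crop]


def make_example(image, rng, size=32):
    """Return (ungraded crop, graded crop, 9-value label) for one training sample."""
    crop = random_crop(image, size, rng)
    cdl = ([rng.uniform(0.7, 1.3) for _ in range(3)]      # hypothetical slope range
           + [rng.uniform(-0.02, 0.02) for _ in range(3)]  # hypothetical offset range
           + [rng.uniform(0.9, 1.1) for _ in range(3)])    # hypothetical power range
    return crop, grade(crop, cdl), cdl


# Usage: a flat 64x64 mid-grey image stands in for a real AP0 frame.
rng = random.Random(7)
image = [[(0.18, 0.18, 0.18)] * 64 for _ in range(64)]
source_crop, graded_crop, label = make_example(image, rng)
```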

Caveats & WIP

This is not a production-ready tool and needs more time spent on it.

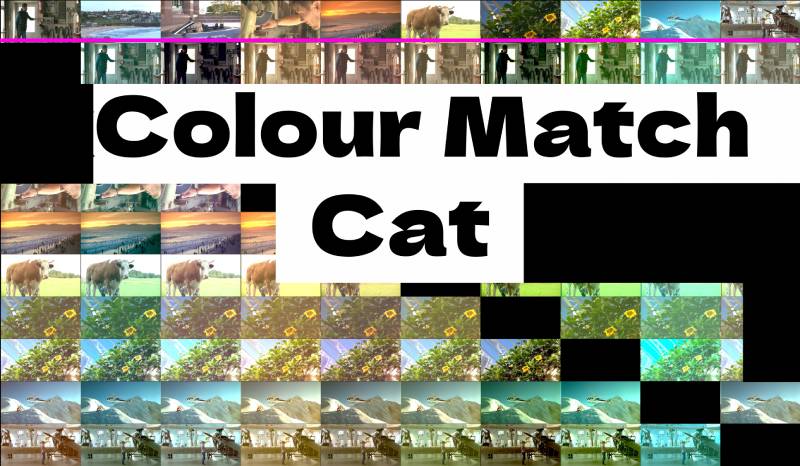

Here is a test of some wildly different images in a grid, to identify where the model currently guesses the CDL incorrectly:


As you can see there are two main issues:

- The add/offset value is often too high. (Turning it off with the “Checkpoint 2” model often gives an acceptable match.) I can see that it is overestimated in the rounding process. If I trained another model, I would experiment with perhaps moving the offset’s decimal point so there is ×10 the information, and maybe even with multiple colour spaces so it’s trained on two image pairs rather than one. Initial tests also show that having two inference nodes rather than one (the first only for the multiply) produced a better result.

- The tool often mistakes large areas of colour for the temperature of the image, and then tints the image with that colour. For example, when the target is the green leaves image, all the resulting images are turned green. This is obviously an issue with blue and green screens, although again it is greatly improved by additional training on a representative set of images from the sequence. I will next test training the model on two image pairs rather than one, where the second pair is not a selected crop. Initial tests did show improvement.

- Lastly, I think it would make sense to test a training run in which the grades are not random RGB values within a threshold. Instead, the values would be weighted more towards temperature and exposure, as those changes are more common.
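The weighting idea in the last point can be sketched together with one reading of the ×10 offset scaling from the first point. Below is a hypothetical way to weight random grades towards exposure and temperature. Exposure is modelled as a 2^stops multiplier. Temperature is modelled crudely as opposing red/blue slope shifts, a simplification rather than a proper white-balance model. Offsets are scaled by 10 when encoding the label and divided back when decoding. All names, ranges and the scale factor are assumptions for illustration.

```python
import random

OFFSET_SCALE = 10.0  # hypothetical: stretch offsets so they carry more precision


def sample_weighted_cdl(rng, max_stops=2.0, max_temp=0.15, jitter=0.03,
                        max_offset=0.005):
    """Draw a CDL dominated by an exposure change plus a warm/cool shift."""
    exposure = 2.0 ** rng.uniform(-max_stops, max_stops)  # stops -> multiplier
    temp = rng.uniform(-max_temp, max_temp)               # > 0 warmer, < 0 cooler
    # Crude temperature model: warming raises the red slope and lowers blue.
    base = (exposure * (1.0 + temp), exposure, exposure * (1.0 - temp))
    # A little per-channel jitter so the grades are not perfectly structured.
    slopes = [b * (1.0 + rng.uniform(-jitter, jitter)) for b in base]
    offsets = [rng.uniform(-max_offset, max_offset) for _ in range(3)]
    powers = [1.0, 1.0, 1.0]
    return slopes + offsets + powers


def encode_label(cdl):
    """Scale the offsets up before they become training targets."""
    return cdl[0:3] + [o * OFFSET_SCALE for o in cdl[3:6]] + cdl[6:9]


def decode_label(encoded):
    """Undo encode_label on a predicted label."""
    return encoded[0:3] + [o / OFFSET_SCALE for o in encoded[3:6]] + encoded[6:9]


# Usage
rng = random.Random(3)
cdl = sample_weighted_cdl(rng)
round_trip = decode_label(encode_label(cdl))
```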

I hope this is useful for anyone else trying to solve this puzzle. When I next get my hands on Nuke, I will run this model again and improve it. If anyone owns professional on-set camera footage and can share it, please reach out to me and we can collaborate.

Thanks to the Foundry for granting me a trial Nuke licence so that I could produce this work.
